The Hidden Math of Throughput: Why Busy Teams Deliver Less

- RESTRAT Labs

- 13 minutes ago


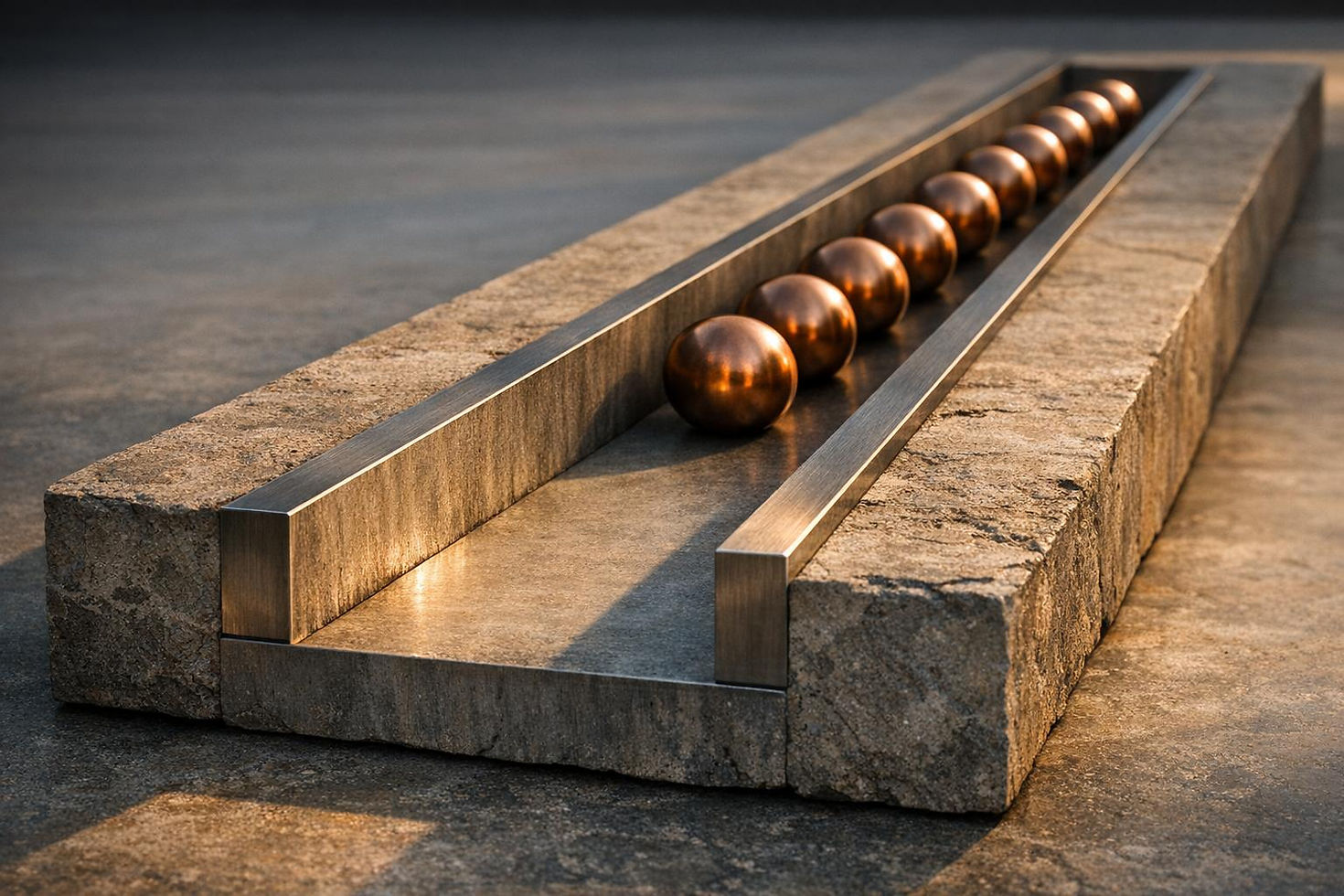

When teams operate at full capacity, productivity doesn’t increase - it collapses. The math is simple but often overlooked: as workload utilization approaches 90%, delays grow steeply and nonlinearly. A task that needs one day of work can take ten days to finish. At 95%, it can take twenty. This isn’t about effort - it’s how overloaded systems behave.

Here’s the fix: design workflows for flow instead of busyness. Limit work-in-progress (WIP), leave buffer capacity (20–30%), and address bottlenecks. Teams running at 70–85% utilization deliver faster, with fewer delays and better results. Overloading doesn’t speed things up - it slows everything down.


Why High Utilization Doesn't Mean High Output

The Utilization Trap

It's easy to think that a jam-packed calendar or fully occupied team signals peak efficiency. After all, if every resource is busy, the business must be performing at its best, right? Not quite. In areas like knowledge work, service operations, and project-based businesses - where tasks are unpredictable and interruptions are common - pushing utilization above 80% can cause a system-wide breakdown.

Here’s the reality: at 80% utilization, wait times can double or even quadruple. Push that to 90%, and delays spike nearly tenfold. At 95%, they reach nearly twentyfold [1]. These aren't small hiccups; they’re massive slowdowns. Unlike factory settings with fixed tasks, knowledge work suffers from these steeply nonlinear delays because task sizes and arrival times shift unpredictably. Even slight increases in workload can overwhelm the system, creating queues that spiral out of control. The problem isn’t a lack of effort - it's that high utilization leaves no room for normal fluctuations.

When teams operate near 95% utilization, they’re forced into constant context switching, which further drags down output. Research shows that every context switch can cost 10 to 20 minutes of focused time [2]. So, even if everyone looks busy, the actual productivity takes a nosedive.

The sweet spot for most knowledge work lies between 70% and 85% utilization [2]. Operating at around 75% utilization can deliver up to five times more economic value compared to running at 95%. Why? Lower utilization reduces cycle times, improves work quality, and leaves room for problem-solving and creativity. As Goldratt’s theories on constraints suggest, local efficiency - keeping everyone busy - doesn’t guarantee overall system performance. High-stakes industries like nuclear power plants and emergency departments understand this well, deliberately keeping utilization between 50% and 75% to handle variability and avoid disasters [2].

This highlights a key takeaway: managing the flow of work is far more important than just keeping everyone busy.

Flow vs. Busyness

The delays caused by overutilization make one thing clear: true productivity comes from maintaining flow, not just keeping people occupied. Organizations focused solely on utilization often treat their teams like assembly lines, assuming 100% utilization equals maximum efficiency [2]. But in systems prone to variability, throughput relies on smooth workflows - not packed schedules.

Flow-driven organizations focus on completing tasks rather than just starting new ones. They use Work-in-Progress (WIP) limits to avoid bottlenecks and intentionally leave some capacity free to ensure speed and predictability [2].

The contrast between these approaches couldn’t be sharper. Utilization-focused teams often face overloaded schedules, long delays, and erratic delivery times. Flow-focused teams, on the other hand, build in buffer capacity, maintain steady cycle times, and deliver consistently. The table below breaks down how system performance changes as utilization increases:

| Utilization Rate | Average Queue Size | Cycle Time Multiplier | System State |
| --- | --- | --- | --- |
| 50% | 1.0 | 2x | Smooth Flow |
| 70% | 2.3 | 3.3x | Sustainable Pace |
| 80% | 4.0 | 5x | Warning Zone |
| 90% | 9.0 | 10x | Velocity Collapse |
| 95% | 19.0 | 20x | Crisis Mode |

This table drives home a crucial point: chasing high utilization doesn’t lead to faster results. In fact, it often causes the opposite - longer delays and poorer performance. The focus should shift to maintaining flow, as it prevents the breakdowns seen in overloaded systems. The next section will explore the math behind these patterns in greater depth.
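The figures in the table match what the simplest single-server queueing model (often written M/M/1) predicts. That model is an assumption here, since the article does not name the one behind its numbers. Under it, the average number of items in the system is ρ / (1 − ρ) and the cycle time multiplier is 1 / (1 − ρ). A minimal Python sketch that reproduces the rows, with illustrative function names:

```python
def avg_queue_size(utilization: float) -> float:
    """Average items in the system under the assumed M/M/1 model: rho / (1 - rho)."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return utilization / (1 - utilization)


def cycle_time_multiplier(utilization: float) -> float:
    """Total time in the system divided by hands-on work time: 1 / (1 - rho)."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return 1 / (1 - utilization)


# Print one line per utilization level used in the table.
for rho in (0.50, 0.70, 0.80, 0.90, 0.95):
    print(f"{rho:.0%}  queue={avg_queue_size(rho):.1f}  "
          f"multiplier={cycle_time_multiplier(rho):.1f}x")
```

Both formulas blow up as utilization approaches 1, which is why the last few percentage points of "busyness" are so expensive.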

The Math Behind Throughput

Little's Law: WIP, Throughput, and Lead Time

The connection between work-in-progress (WIP), throughput, and lead time might not seem obvious at first glance, but it's grounded in precise math. Little's Law states that average lead time equals the number of active tasks (WIP) divided by the completion rate (throughput). For example, if you have 20 tasks in progress and complete 2 per week, the average lead time is 10 weeks [3].
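As a quick check on the arithmetic, Little's Law can be written as lead time = WIP / throughput. The sketch below uses a hypothetical helper name and assumes WIP and throughput are long-run averages measured in consistent units (tasks and tasks per week):

```python
def average_lead_time(wip: float, throughput: float) -> float:
    """Little's Law: average lead time = WIP / throughput."""
    if throughput <= 0:
        raise ValueError("throughput must be positive")
    return wip / throughput


print(average_lead_time(20, 2))  # 10.0 weeks, the example from the text
print(average_lead_time(40, 2))  # 20.0 weeks: double the WIP, same throughput
```

Because throughput sits in the denominator, the only ways to shorten lead time are to finish work faster or to hold less work in progress.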

Here’s the catch: increasing WIP doesn’t make things faster - it actually slows them down. When WIP grows while throughput stays the same (because you’re already maxed out), lead times get longer. A team managing 40 tasks instead of 20 won’t work twice as fast; in fact, each task ends up taking twice as long. As one expert put it:

"Little's Law isn't just a formula, it's a mindset. It teaches us that improvement doesn't come from pushing more work into the system, but from making work flow smoothly through it." - Center for Lean [3]

The situation worsens as utilization climbs past 80%. At 90% utilization, cycle times can spike by 10×, and at 95%, they can jump to 20× [1]. A task that requires just one day of actual work might take 20 days to complete because of the time it spends sitting in a queue. High WIP acts like invisible inventory - it hides inefficiencies, delays revenue, and makes timelines unpredictable [4]. Interestingly, teams that implemented strict WIP limits saw development times shrink by 30% to 50%, all without adding extra effort [4]. With this in mind, let’s explore how constraints further impact throughput.

Constraints and Bottlenecks

Beyond the delays caused by WIP, bottlenecks play a key role in limiting a system’s throughput. Eliyahu Goldratt's Theory of Constraints highlights why trying to keep everyone busy can backfire. Every system has a bottleneck - the slowest step that determines the overall capacity. When non-bottleneck resources are overloaded, they simply add to the pile of work waiting at the bottleneck, increasing lead times without boosting throughput [4]. It’s like speeding up an assembly line only to have everything jam up at the slowest station.

The bottleneck defines the system’s capacity. For instance, if your bottleneck can handle 10 jobs per week, releasing 15 jobs into the system won’t increase output. Instead, it creates a queue, tying up resources and delaying other opportunities [6]. When utilization at the bottleneck nears 100%, even minor disruptions - like a sick team member or a late shipment - can snowball into permanent delays because idle time during slower periods can’t be saved for busier times [1].
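The 10-versus-15 example can be traced week by week. This is a deliberately simplified sketch: it assumes fixed weekly rates with no variability, and the function name is hypothetical:

```python
def simulate_release(release_per_week: int, bottleneck_per_week: int, weeks: int):
    """Return (jobs completed, jobs still queued at the bottleneck)."""
    queue = 0
    completed = 0
    for _ in range(weeks):
        queue += release_per_week               # new jobs arrive at the bottleneck
        done = min(queue, bottleneck_per_week)  # the bottleneck caps weekly output
        queue -= done
        completed += done
    return completed, queue


print(simulate_release(15, 10, weeks=8))  # (80, 40)
print(simulate_release(10, 10, weeks=8))  # (80, 0)
```

Both runs finish the same 80 jobs. Releasing 15 per week only adds a 40-job queue, and by Little's Law that queue means about four extra weeks of waiting for each new job.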

Large backlogs at bottlenecks also kill urgency. When tasks sit idle for weeks, teams often lose momentum, which lowers actual throughput [1]. The solution isn’t to push harder but to control the flow of work, releasing tasks at a rate the bottleneck can handle. Surprisingly, a team working at 75% utilization can deliver up to 5× more economic value than one at 95%, simply because faster delivery reduces the cost of delays [1]. For small and medium-sized businesses (SMBs), overloading schedules doesn’t just slow things down - it clogs bottlenecks, creates backlogs, and disrupts cash flow.

Variability Under Load

On top of bottlenecks, variability within a system makes delays even worse, compounding their effect on cycle times. In areas like knowledge work or service operations - where task durations and arrival rates are unpredictable - random fluctuations can’t just "even out" [2]. When demand temporarily exceeds capacity, backlogs grow quickly, and the system struggles to recover during quieter periods because capacity is fixed [2].

As utilization (ρ) approaches 100%, the impact of variability grows without bound. Average queue length grows roughly in proportion to ρ divided by (1 – ρ) [2], so each additional point of utilization costs more than the last. At 90% utilization, a 1-day task might take 10 days to complete due to queuing delays, and at 95%, it could stretch to 20 days [1]. Each small increase in utilization doesn’t just slow things down - it can grind the entire system to a halt.
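To see how random arrivals and task sizes produce these delays, here is a small simulation sketch. It assumes a single worker, exponentially distributed work times and arrival gaps, and first-come-first-served order. None of these specifics come from the article, and real teams will differ.

```python
import random


def mean_time_in_system(utilization: float, n_tasks: int = 200_000, seed: int = 1) -> float:
    """Simulate one queue; return mean time in system, in units of mean work time."""
    if not 0 < utilization < 1:
        raise ValueError("utilization must be strictly between 0 and 1")
    rng = random.Random(seed)
    wait = 0.0   # how long the current task waits before work starts
    total = 0.0
    for _ in range(n_tasks):
        service = rng.expovariate(1.0)         # work time, mean 1
        total += wait + service                # waiting plus working
        gap = rng.expovariate(utilization)     # time to next arrival, mean 1/utilization
        wait = max(0.0, wait + service - gap)  # backlog carried over to the next task
    return total / n_tasks


for rho in (0.5, 0.9):
    print(rho, round(mean_time_in_system(rho), 1))
```

With enough tasks the results should approach 1 / (1 − ρ), roughly 2 and 10, though any single run will wobble. The `max(0.0, ...)` line is the key: quiet periods cannot bank spare capacity, so backlog from busy periods is only ever worked off, never pre-paid.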

Large batches of work make this problem worse. A single massive project introduces more variability than breaking the same effort into smaller chunks, leading to a sharp rise in cycle times [4]. Early variability - like unclear requirements at the start of a project - tends to ripple through every subsequent step [4]. The best way to manage this instability is by maintaining reserve capacity. High-stakes environments, such as nuclear power plants, deliberately operate at 50% to 75% utilization to handle variability without descending into chaos [2]. Similarly, SMBs benefit from keeping a 20% to 30% buffer - not as waste, but as a safeguard to keep systems stable when reality doesn’t match the plan.

How Overload Reduces Output in SMBs

Building on the math and theory of overload, let’s explore how these dynamics play out in the day-to-day operations of small and medium-sized businesses (SMBs).

Example: Overlapping Jobs in a Trade Business

Imagine a contractor juggling five overlapping jobs at 90% utilization. At first glance, this might seem like an efficient way to maximize revenue. But the reality is far different. At such high utilization, cycle times balloon to 10 times the actual work duration [1]. A task that should take two days to complete now stretches to 20 days, mostly due to delays caused by dependent activities waiting in line.

Here’s what happens: permits delayed for Job A hold up Job B. Materials for Job C arrive, but the crew is still tied up on Job D, leaving those materials unused and cash tied up. Crews moving between three sites in a single day lose hours to travel and setup, creating unnecessary disruptions. These inefficiencies pile up, causing throughput to plummet while lead times stretch far beyond the planned schedule.

Now, compare this to a contractor who limits active jobs to three, maintaining a 75% utilization rate. With fewer jobs in progress, each one moves through the system faster. Projects finish sooner, invoices go out earlier, and cash flows improve. Customers are happier because deadlines are met. By keeping 20–30% buffer capacity, the same crew delivers five times more economic value than their overloaded competitor [1]. The key isn’t working harder - it’s finishing one job before adding another. This example highlights how exceeding safe operational limits leads to predictable delays, as outlined by the mathematical principles discussed earlier.
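The contractor comparison above can be put in rough numbers. The sketch assumes the same single-queue approximation, where elapsed time is work time divided by (1 − utilization). The 10x figure at 90% comes from the text, while the 4x figure at 75% is what that approximation implies rather than something the article states.

```python
def days_to_finish(work_days: float, utilization: float) -> float:
    """Work time stretched by the 1 / (1 - rho) multiplier (single-queue assumption)."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return work_days / (1 - utilization)


overloaded = days_to_finish(2, 0.90)  # five overlapping jobs
buffered = days_to_finish(2, 0.75)    # three active jobs

print(round(overloaded, 1), round(buffered, 1))  # 20.0 8.0
```

Under these assumptions the same two-day task is done in 8 days instead of 20, so the invoice for it can go out 12 days earlier.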

Owner Overload and Scheduling Breakdown

While job scheduling for crews is one part of the equation, owner-operators often face a similar overload problem. Picture an owner who handles every detail: estimating, supervising job sites, negotiating with suppliers, and invoicing. When they schedule every minute of their day, they enter what queuing theory calls a "high-queue state" [1]. At nearly 100% utilization, even a small disruption - like a delayed supplier call or an unexpected issue on-site - creates a backlog that snowballs. There’s no buffer to absorb these disruptions, and idle time during quieter periods can’t be stored to catch up later [2].

This isn’t just a time management issue - it’s a design flaw in the system. The owner becomes the bottleneck, and every task waiting for their input grinds to a halt. Invoices are delayed, decisions take longer, and scheduling errors multiply because there’s no time to think through the details. The outcome is inevitable: more effort results in less output, cash cycles drag on, and the business constantly feels behind, despite being busy. As predicted by Little’s Law and Goldratt’s theories, overloaded systems - whether in crew scheduling or owner decision-making - consistently undermine productivity and throughput.

Designing for Flow Instead of Utilization

Fixing throughput collapse isn’t about working harder or throwing more resources at the problem. The real solution lies in redesigning systems to focus on flow rather than just keeping everyone busy. This means managing constraints, setting clear work-in-progress (WIP) limits, and maintaining a buffer to handle variability without the system breaking down. These ideas are rooted in the theories of Goldratt and Little, which emphasize the dangers of overloaded systems and the importance of prioritizing flow.

Core Principles for Flow

Three key principles guide the shift to a flow-focused approach:

1. Set explicit WIP limits. Instead of letting tasks pile up endlessly, put a cap on the number of active tasks at any given time. A good starting point? Limit each person to no more than two active tasks [1]. For small and medium-sized businesses (SMBs), this could mean restricting the number of simultaneous jobs or estimates being handled. This prevents the queues that slow everything down from growing out of control.

2. Focus on the constraint, not the load. Every system has a bottleneck - the slowest point that dictates overall throughput. Goldratt’s work shows that adding capacity elsewhere won’t help unless the bottleneck is addressed. For instance, if permitting is the bottleneck in a trade business, hiring more crews won’t speed things up. Instead, efforts should go toward moving permits through faster while keeping slack in other areas to avoid cascading delays [5].

3. Protect buffer capacity for critical tasks. Keep teams working at 70–75% of their total capacity, leaving 25–30% unallocated [1]. This isn’t wasted time - it’s a buffer to handle urgent issues, absorb disruptions, and keep things running smoothly even when variability occurs.
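The first principle is easy to express as a rule in code. The class below is a hypothetical, in-memory sketch of a WIP-limited board, not the API of any real Kanban tool:

```python
class WipLimitedBoard:
    """A tiny board that refuses to start new work once the WIP limit is reached."""

    def __init__(self, wip_limit: int):
        if wip_limit < 1:
            raise ValueError("wip_limit must be at least 1")
        self.wip_limit = wip_limit
        self.backlog = []
        self.in_progress = []
        self.done = []

    def add(self, task: str) -> None:
        """Queue a task without starting it."""
        self.backlog.append(task)

    def start_next(self) -> bool:
        """Pull the oldest backlog item, but only if there is capacity."""
        if not self.backlog or len(self.in_progress) >= self.wip_limit:
            return False
        self.in_progress.append(self.backlog.pop(0))
        return True

    def finish(self, task: str) -> None:
        """Move a task from in-progress to done, freeing one WIP slot."""
        self.in_progress.remove(task)
        self.done.append(task)


board = WipLimitedBoard(wip_limit=2)
for job in ("Job A", "Job B", "Job C"):
    board.add(job)

print([board.start_next() for _ in range(3)])  # [True, True, False]
board.finish("Job A")
print(board.start_next())                      # True: Job C starts only after Job A is done
```

The point of the design is that starting work is the gated action: nothing new begins until something finishes.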

SMB Studio Application: Stabilizing Operations

Applying these principles in SMB operations starts with managing the flow of work. Rather than juggling multiple overlapping projects, focus on completing fewer tasks at a time. Breaking larger jobs into smaller, manageable chunks (1–3 days) makes workflows more predictable and reduces the impact of variability [1] [4]. Tools like Kanban boards or physical markers can make WIP visible, which helps enforce limits and prevent overload [1] [6].

Scheduling also plays a critical role. Assign one team member to handle unplanned urgent requests on a rotating basis. This allows the rest of the team to stay focused on their primary tasks [1]. For owner-led businesses, it might mean dedicating specific time blocks to client meetings or estimating, rather than scattering these tasks throughout the week. The goal is to stabilize the flow of work coming in, reducing the variability that often leads to delays.

These changes not only streamline daily operations but also pave the way for measurable improvements.

Outcomes of Flow-Centered Systems

Organizations that prioritize flow over constant utilization see clear benefits. Work moves faster, queues shrink, and throughput increases. For example, businesses that implement effective queue management have cut average development times by 30% to 50% [4]. Shorter cycle times translate to quicker invoicing, better cash flow, and fewer last-minute crises that damage customer trust.

Research from McKinsey and Bain highlights how speed drives success, with flow being the mechanism to achieve it. Operating below maximum capacity not only speeds up delivery but also enhances economic value. For most knowledge work, the optimal utilization rate falls between 70% and 85% [2]. When demand becomes unpredictable, companies that design for throughput consistently outperform those that confuse busyness with productivity.

Conclusion

The numbers don’t lie: pushing utilization to 100% doesn't improve output - it causes it to collapse. Overloading systems leads to steeply growing delays and unpredictable results. In fact, busy systems often produce less because the additional strain only compounds the slowdown.

The real answer isn’t about cramming more into already packed schedules. It’s about designing systems that prioritize smooth flow. By applying principles like Goldratt's theory of constraints and Little's Law, you can counteract the chaos of high utilization. Strategies such as managing bottlenecks, setting clear work-in-progress (WIP) limits, and maintaining buffer capacity can transform unpredictable operations into reliable, steady ones. A team operating at 75% utilization can deliver up to five times more economic value than one running at 95%, simply by eliminating the delays that sap velocity [1]. These aren’t just theories - they reflect how overloaded systems behave in the real world.

For small businesses and large enterprises alike, the advantage goes to those who treat throughput as a system property, not an issue of individual effort. Take the example of small businesses juggling overlapping jobs: when work piles up, progress stalls. A systems-focused approach, on the other hand, ensures efficiency by keeping work flowing. Especially when demand fluctuates, businesses that optimize for flow will consistently outperform those that mistake packed schedules for productivity. Predictable delivery not only strengthens customer trust but also safeguards profitability.

The choice is straightforward: stick with high utilization and endure hidden delays, or redesign for flow and achieve meaningful results. Flow always outperforms utilization - it directs effort into systems built to deliver, not just stay busy.

Calm, well-functioning operations aren’t just nice to have - they’re a competitive advantage grounded in the principles of flow.

FAQs

How do we know our team is overloaded?

When a team is stretched too thin, it doesn't take long for the cracks to show. Missed deadlines, forgotten meetings, and overlooked details are clear red flags pointing to mental strain. You might also notice team members struggling with increased stress, confusion about priorities, or a noticeable dip in engagement and productivity.

Physical signs can creep in too - fatigue and distraction often go hand in hand with being overworked. These warning signals shouldn't be ignored. Addressing them quickly can help prevent further strain on performance and overall output.

What’s a good WIP limit for a small team?

For small teams, a Work In Progress (WIP) limit of 1 to 2 active items per person is a good starting point. Keeping WIP low helps avoid overwhelming team members and shortens lead times. This follows from Little’s Law: at the same throughput, fewer items in progress means each one finishes sooner.

How do we find and manage our bottleneck?

To spot a bottleneck, pay attention to areas where tasks pile up or progress slows significantly - this is usually the main restriction point. Once you've identified it, take steps to manage it effectively. Start by limiting work-in-progress (WIP) so the bottleneck isn't overwhelmed. Keep it steadily supplied with work, but release new tasks no faster than it can finish them. Adjust the surrounding processes to ensure a smoother workflow. The key is to prioritize improving throughput - how efficiently work moves through the system - rather than simply keeping everyone busy. This approach helps minimize delays and ensures consistent performance throughout the process.
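Both steps can be sketched in a few lines. The stage names and numbers below are hypothetical, and "largest pile of waiting work" is used as a simple stand-in for a fuller analysis:

```python
def find_bottleneck(waiting_by_stage: dict) -> str:
    """Return the stage with the most work waiting in front of it."""
    if not waiting_by_stage:
        raise ValueError("no stages given")
    return max(waiting_by_stage, key=waiting_by_stage.get)


def max_release_rate(capacity_by_stage: dict) -> float:
    """Release work no faster than the slowest stage can finish it."""
    if not capacity_by_stage:
        raise ValueError("no stages given")
    return min(capacity_by_stage.values())


# Hypothetical trade-business pipeline: jobs waiting, and jobs per week each stage can handle.
waiting = {"estimating": 2, "permitting": 9, "installation": 3}
capacity = {"estimating": 12, "permitting": 6, "installation": 10}

print(find_bottleneck(waiting))    # permitting
print(max_release_rate(capacity))  # 6
```

In this made-up example, starting more than 6 jobs per week would only lengthen the permitting queue.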